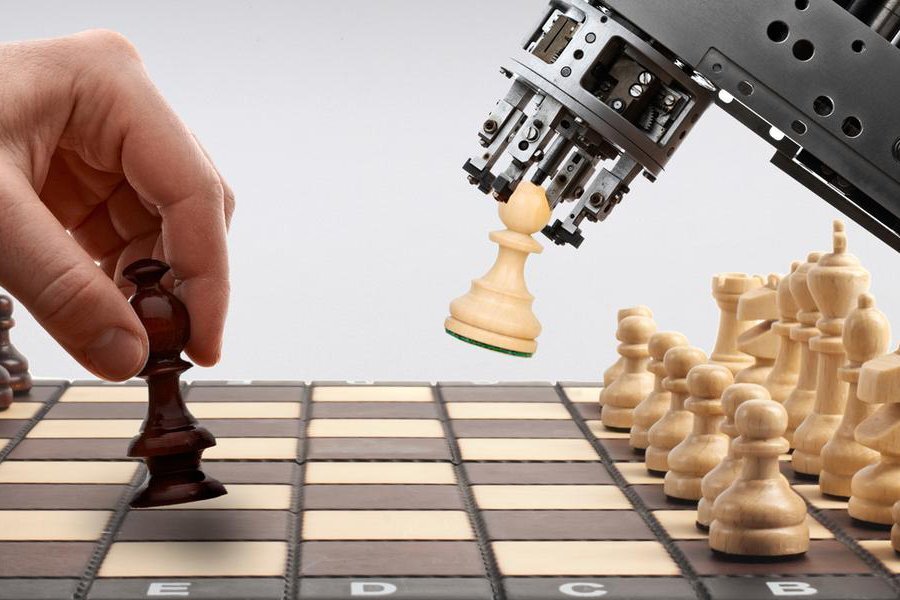

Long before automation and artificial intelligence invaded our world and took over half our tasks, machines encroached on the tightly fenced world of chess to challenge the human brain with their chip-powered intellect.

On February 10, 1996, the history of chess took a sharp turn when, for the first time, a computer beat a reigning world champion at his own game under regular tournament time controls.

IBM’s prodigy computer Deep Blue beat the legendary Garry Kasparov in the first game of a six-game match. It was a moment when machine intelligence basked in its glory!

Of the six games, two ended in draws, and Kasparov won three of the remaining four to take the match 4–2. He faced Deep Blue again the following year, only to lose the match. New equations were carved into the armour of the board game as research on machine intelligence produced programs that could consider an average of 200 million positions per second.

Man and the Machine: The Evolution of Computer Chess

The idea of man versus machine was not born in the twentieth century. As early as 1890, the Spanish scientist Leonardo Torres y Quevedo came up with an electromechanical device, made of wires, switches and circuits, that could play a simple game of chess. Its aim was to checkmate a human opponent in a simple endgame: king and rook versus king.

This forerunner of computer chess was not especially clever and was clumsy with its moves: it often needed more than 50 moves to finish a task that an average human player could complete in fewer than 20.

Still, the device could recognize illegal moves and always delivered checkmate eventually. Although Torres y Quevedo himself denied that the apparatus had any practical purpose, it attracted much attention as an impressive attempt to program a machine to perform a task the human way.

A later and more significant development was the electronic digital computer, which followed the Second World War. In 1947, Alan Turing of the University of Manchester, England, designed the first basic program capable of analyzing one ply (one side’s move) ahead.

In 1949, Claude Shannon, an American research scientist at Bell Telephone Laboratories in New Jersey, presented a paper that inspired a generation of future programmers.

Shannon believed, much like his predecessors, that advances in chess-playing programs could open new frontiers, such as machines that could translate languages, forecast the weather, or take part in strategic military operations.

Shannon showed that a computer playing an entire game of chess could not examine every position between the current one and checkmate, which might lie 40 or 50 moves ahead. It would have to make decisions on the basis of incomplete information, so choosing good moves would depend on correctly evaluating future positions that were not yet checkmate.

Shannon’s paper set out criteria for evaluating each position a program would consider. These criteria mattered because even a basic program had to weigh the relative merits of a very large number of positions.
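The idea of searching to a fixed depth and then evaluating the positions reached there can be sketched in a few lines of Python. This is a minimal illustration, not Shannon’s program: it assumes a made-up two-move game tree (the `Node` class and the `minimax` and `best_move` functions are hypothetical names) and scores positions by material alone, using the piece weights commonly quoted from Shannon’s paper (king 200, queen 9, rook 5, bishop and knight 3, pawn 1).

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Material weights commonly quoted from Shannon's evaluation function.
# His full function also included pawn-structure and mobility terms;
# those positional terms are left out of this sketch.
WEIGHTS = {"K": 200, "Q": 9, "R": 5, "B": 3, "N": 3, "P": 1}


def material_score(white: str, black: str) -> int:
    """Static evaluation from White's side: material balance only."""
    return sum(WEIGHTS[p] for p in white) - sum(WEIGHTS[p] for p in black)


@dataclass
class Node:
    """Hypothetical toy position: each side's remaining pieces as letters."""
    white: str
    black: str
    children: list[Node] = field(default_factory=list)


def minimax(node: Node, depth: int, white_to_move: bool) -> int:
    """Depth-limited minimax: stop at the horizon and evaluate statically."""
    if depth == 0 or not node.children:
        return material_score(node.white, node.black)
    scores = [minimax(c, depth - 1, not white_to_move) for c in node.children]
    # White picks the highest score, Black the lowest.
    return max(scores) if white_to_move else min(scores)


def best_move(root: Node, depth: int) -> tuple[int, int]:
    """Index and score of White's best move from the root position."""
    scores = [minimax(c, depth - 1, white_to_move=False) for c in root.children]
    best = max(scores)
    return scores.index(best), best


# Toy tree: White (K, Q, R) against Black (K, R, P, P), White to move.
root = Node("KQR", "KRPP", [
    # Move 0: the queen grabs a pawn, but Black can then win the queen.
    Node("KQR", "KRP", [Node("KR", "KRP"), Node("KQR", "KRP")]),
    # Move 1: a quiet move that keeps the queen safe.
    Node("KQR", "KRPP", [Node("KQR", "KRPP"), Node("KQR", "KRPP")]),
])

print(best_move(root, depth=1))  # (0, 8): one ply only sees the pawn gain
print(best_move(root, depth=2))  # (1, 7): two plies also see the queen loss
```

With a one-ply horizon the program greedily takes the pawn; searching one ply deeper, it sees Black’s reply and prefers the quiet move, which is why extra depth of search made programs stronger.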

In a typical chess position, White might have about 30 legal moves, and Black might have about 30 possible replies to each of them. A machine looking for White’s best move would therefore have to examine 30 × 30, or 900, positions just to cover Black’s replies: a two-ply search. A three-ply search (a White move, a Black reply, and a White response to that) would involve 30 × 30 × 30, or 27,000, different final positions.
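The arithmetic generalizes: with a uniform branching factor b, a full-width search of d plies reaches b^d final positions. The short Python sketch below assumes, as the paragraph does, exactly 30 legal moves in every position (real positions vary); `leaf_positions` is an illustrative name, not part of any real chess program.

```python
def leaf_positions(branching: int, plies: int) -> int:
    """Final positions reached by a full-width search of the given depth,
    assuming every position has the same number of legal moves."""
    return branching ** plies


# Assumed uniform branching factor of 30, as in the example above.
for plies in range(1, 7):
    print(f"{plies}-ply search: {leaf_positions(30, plies):,} positions")
```

Each extra ply multiplies the work by 30, so a six-ply search already means 729,000,000 final positions.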

The Face-Off: Chess v/s Chipsets

Computers began their real challenge against humans in the late 1960s. In a 1967 U.S. Chess Federation tournament, a program named MacHack VI, written by MIT undergraduate Richard Greenblatt, drew one game and lost four against human opponents.

Computers of the 1960s were limited in assessing positions and could look no more than two moves ahead. But researchers estimated that every additional half-move of search could raise a program’s performance level by 250 rating points.
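That estimate can be set against the cost of searching deeper, using the branching arithmetic from the earlier three-ply example. The Python sketch below is only an illustration under stated assumptions: it takes the quoted 250 points per half-move as a constant, assumes a uniform 30 legal moves per position, and extrapolates linearly, which is a simplification. `deeper_search` is a hypothetical helper.

```python
from __future__ import annotations

POINTS_PER_PLY = 250  # researchers' rough estimate, treated as constant here
BRANCHING = 30        # assumed typical number of legal moves per position


def deeper_search(extra_plies: int) -> tuple[int, int]:
    """Return (estimated rating gain, factor by which the number of
    positions to examine grows) for searching extra_plies deeper."""
    return extra_plies * POINTS_PER_PLY, BRANCHING ** extra_plies


for extra in (1, 2, 3):
    gain, cost = deeper_search(extra)
    print(f"+{extra} ply: about +{gain} rating points, {cost:,}x more positions")
```

The rating gain grows linearly in this model while the work grows by a factor of 30 per ply, which is why faster hardware, measured in positions per second, mattered so much.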

In 1970, Chess 3.0, a program developed by researchers at Northwestern University, won the first American computer chess championship. Through the late 1970s, the spread of microprocessors fuelled further progress in chess programming, and by the late 1980s some of the strongest chess programs were beating more than half of the world’s eminent players.

In 1988, a computer program named Hi-Tech, developed at Carnegie Mellon University, defeated grandmaster Arnold Denker in a short match.

The program could consider up to 175,000 positions per second and used 64 computer chips, one for each square on the board. Meanwhile, a Carnegie Mellon graduate student, Feng-Hsiung Hsu, built a program called ChipTest around a custom-designed chip, and it won the North American Computer Championship in 1987.

This custom-designed chip was the earliest ancestor of Deep Blue, the machine that defeated Garry Kasparov in 1996.

In 1988, ChipTest was reincarnated as Deep Thought, a powerful program that defeated grandmaster Bent Larsen in a tournament game that same year.

Though it ranked below human grandmasters, and below Hi-Tech, in its skill at evaluating positions, Deep Thought could consider 700,000 positions a second and played above the 2700 level.

Deep Thinking: The Battle That Changed History

At the start of the 1990s, International Business Machines Corporation (IBM) decided to sponsor Deep Thought in an uncompromising bid to defeat the world’s best human player under traditional time limits.

The main advance was in the computer that ran the chess program. IBM developed a sophisticated multiprocessing system and tested it using chess. The system used 32 microprocessors, each with six programmable chips designed specifically for chess. IBM later used the system for weather forecasting during the 1996 Olympic Games in Atlanta, U.S.

In 1991, Deep Thought, remodelled and rechristened Deep Blue, was ready to take on the world champion with the equivalent of a 3000 rating (against Kasparov’s 2800).